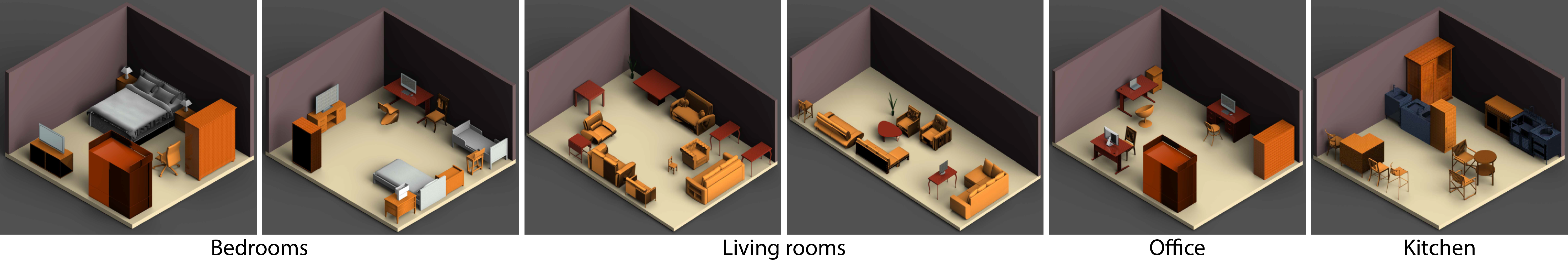

We present a generative neural network which enables us to generate plausible 3D indoor scenes in large quantities and varieties, easily and highly efficiently. Our key observation is that indoor scene structures are inherently hierarchical. Hence, our network is not convolutional; it is a recursive neural network, or RvNN. Using a dataset of annotated scene hierarchies, we train a variational recursive autoencoder, or RvNN-VAE, which performs scene object grouping during its encoding phase and scene generation during decoding. Specifically, a set of encoders is recursively applied to group 3D objects based on support, surround, and co-occurrence relations in a scene, encoding information about the objects' spatial properties, semantics, and relative positioning with respect to other objects in the hierarchy. By training a variational autoencoder (VAE), the resulting fixed-length codes roughly follow a Gaussian distribution. A novel 3D scene can be generated hierarchically by the decoder from a code randomly sampled from the learned distribution. We coin our method GRAINS, for Generative Recursive Autoencoders for INdoor Scenes. We demonstrate the capability of GRAINS to generate plausible and diverse 3D indoor scenes and compare with existing methods for 3D scene synthesis. We show applications of GRAINS including 3D scene modeling from 2D layouts, scene editing, and semantic scene segmentation via PointNet, whose performance is boosted by the large quantity and variety of 3D scenes generated by our method.
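To make the encoding phase described above more concrete, the following is a minimal sketch of recursive encoding over a scene hierarchy, followed by VAE-style sampling of a latent code. It is not the GRAINS implementation: the code length, the 6-value box feature, the strictly binary grouping for every relation type, the random (untrained) weights, and all names such as `SceneEncoder` and `sample_latent` are assumptions made for illustration. The decoder, which would recursively expand a sampled code back into a hierarchy, is omitted.

```python
import math
import random

CODE_DIM = 8   # assumed fixed code length (illustrative only)
BOX_DIM = 6    # assumed leaf feature: box center (3) + extents (3)


def make_weights(n_in, n_out, rng):
    """Random (n_out x n_in) matrix standing in for trained parameters."""
    scale = 1.0 / math.sqrt(n_in)
    return [[rng.uniform(-scale, scale) for _ in range(n_in)]
            for _ in range(n_out)]


def matvec(weights, vec):
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def tanh_layer(weights, vec):
    """Single layer with tanh activation: tanh(W @ vec)."""
    return [math.tanh(v) for v in matvec(weights, vec)]


class SceneEncoder:
    """Hypothetical recursive encoder: one grouping encoder per relation."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.leaf = make_weights(BOX_DIM, CODE_DIM, rng)
        # Relation names follow the abstract; binary grouping is a simplification.
        self.group = {rel: make_weights(2 * CODE_DIM, CODE_DIM, rng)
                      for rel in ("support", "surround", "cooccur")}
        self.to_mu = make_weights(CODE_DIM, CODE_DIM, rng)
        self.to_logvar = make_weights(CODE_DIM, CODE_DIM, rng)

    def encode(self, node):
        """Fold a hierarchy bottom-up into one fixed-length code."""
        if node["type"] == "leaf":
            return tanh_layer(self.leaf, node["box"])
        left, right = (self.encode(child) for child in node["children"])
        # Concatenate the two child codes and merge them with the
        # encoder that matches this node's relation type.
        return tanh_layer(self.group[node["type"]], left + right)

    def sample_latent(self, root_code, rng):
        """Reparameterization: z = mu + sigma * eps, with eps ~ N(0, 1)."""
        mu = matvec(self.to_mu, root_code)
        logvar = matvec(self.to_logvar, root_code)
        return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
                for m, lv in zip(mu, logvar)]


# Toy hierarchy: (desk supports lamp) co-occurs with a chair.
scene = {"type": "cooccur", "children": [
    {"type": "support", "children": [
        {"type": "leaf", "box": [0.0, 0.0, 0.4, 1.2, 0.6, 0.8]},  # desk
        {"type": "leaf", "box": [0.0, 0.0, 0.9, 0.3, 0.3, 0.2]},  # lamp
    ]},
    {"type": "leaf", "box": [1.5, 0.0, 0.5, 0.5, 0.5, 1.0]},      # chair
]}

encoder = SceneEncoder(seed=0)
root_code = encoder.encode(scene)
z = encoder.sample_latent(root_code, random.Random(1))
```

Whatever the shape of the hierarchy, the root code has the same length, which is what allows a single Gaussian prior to be placed over whole scenes. At generation time one would instead draw `z` directly from the prior and pass it to a recursive decoder.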

If you find this work useful for your research, please cite our paper using the BibTeX entry below:

@article{li2018grains,
  title={GRAINS: Generative Recursive Autoencoders for Indoor Scenes},
  author={Li, Manyi and Gadi Patil, Akshay and Xu, Kai and Chaudhuri, Siddhartha and Khan, Owais and Shamir, Ariel and Tu, Changhe and Chen, Baoquan and Cohen-Or, Daniel and Zhang, Hao},
  journal={ACM Transactions on Graphics},
  volume={37},
  number={},
  year={2018},
  publisher={ACM}
}

We thank the anonymous reviewers for their valuable comments. This work was supported, in part, by an NSERC grant (611370), the 973 Program of China under Grant 2015CB352502, the key program of NSFC (61332015), NSFC programs (61772318, 61532003, 61572507, 61622212), ISF grant 2366/16, gift funds from Adobe, and the China Scholarship Council.